I’m not entirely sure what to think of this situation, but it seems to be yet another strong piece of evidence that the people behind W3schools don’t have our best interests in mind.

I’m currently in the process of revamping my CSS3 Click Chart app and I was doing my usual cursory searches for simple JavaScript methods that I often forget the syntax for. Notice what I stumbled across, as shown in the re-enactment below.

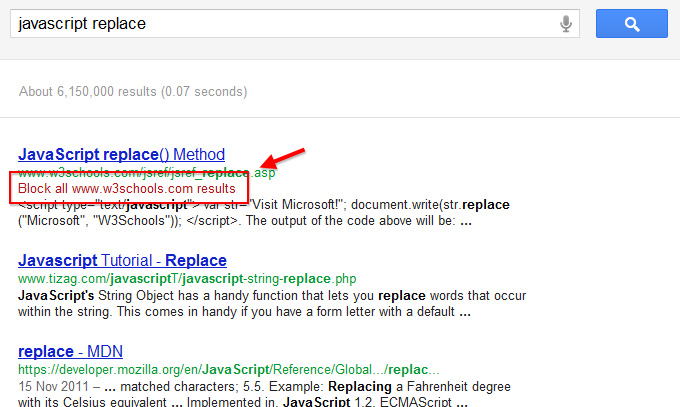

First, I did a search for something like the terms “javascript replace”. As usual, the top entry was a W3schools.com result:

I clicked the W3schools.com result (I usually don’t, but I think I was just curious or something), then I quickly changed my mind and hit the back button. So I got the following screen:

I’ve seen this option before. It’s something Google added earlier this year to help you manually filter domains from search results with the option to undo or manage the sites you’ve blocked.

So I decided enough was enough. I always see W3schools.com results at the top of the results pages, so I hit the block link to block that domain. Later I did another search for something else. Here’s what I saw:

Wow, I must not have blocked them correctly, or else something bugged out when I clicked that “block” option. Why is W3Schools.com still showing up as the first result, even after I block them?

Look closely. Do you see what’s going on?

So yes, I absolutely did block www.w3schools.com, but somehow they’ve reached the top of search results for a number of subdomains. In this case, the subdomain resembles the usual “www”. If you aren’t paying attention, you wouldn’t even notice the difference, because who’s counting W’s anyhow?

But it gets worse. If you block that one too, then you’ll get similar results, but not as cloaked:

So evidently they’re (somehow) optimizing their SERPs to also display these subdomains, seemingly to battle the fact that many web developers are probably opting to block them in their personalized results. From what I can tell they’re using “www1”, “www3”, and “wwww” (4 W’s) while the “www2” subdomain seems to have limited results.

But it doesn’t stop there. They also have results coming up using a single w and two w’s as subdomains.

What Does This Mean?

I’m not an SEO super-expert, but my gut tells me this is not ethical behaviour. It’s obvious that the folks who run this site are trying to get to the top of results at all costs.

I don’t hate W3schools. They have an okay website with some average content that can come in handy for beginners looking for quick solutions. But their site often contains errors, omissions, and incomplete info. I also think it’s ridiculous that they are at the top of search results for, it seems, virtually every web development-related search term. They have as much power in web dev search results as Wikipedia has in regular search results. That is just wrong.

I hope these new findings lead to them being penalized to some degree so we can see quality web development references like MDN and SitePoint get the SEO rank they deserve.

Am I overreacting here? If anyone has any insight into the ethics of this and how it relates to SEO, please chime in.

More Subdomains Found

After realizing that I was having the same problem on my own server (that is, random subdomains not redirecting to a canonical “www”), I thought that some of the comments might be correct in saying that it could be a result of people mistyping URLs along with wrong server configurations.

So just out of curiosity, I decided to use this domain/ip lookup tool to see what other domains are listed on the same IP as w3schools.com. Check it out for yourself by entering their domain.

I compiled the results in a JSBin you can find here. Each link sends you to Google results exclusively for the subdomains listed. Some of the subdomains (which could not possibly be user entered typos) include:

- cert

- validator

- com

- javawww

- validate

- w3

- www.jigsaw

You can click through to all the individual subdomain search results on the JSBin page.
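For anyone who wants to reproduce those per-subdomain searches without the JSBin page, here is a minimal Python sketch. It only builds Google `site:` query URLs for the subdomains listed above. The `site_search_url` helper and the search URL format are assumptions for illustration, not a description of how the JSBin page was put together.

```python
from urllib.parse import urlencode


def site_search_url(subdomain: str, domain: str = "w3schools.com") -> str:
    """Build a Google search URL limited to one subdomain via the site: operator.

    The helper name and the /search?q=... URL shape are illustrative assumptions.
    """
    host = f"{subdomain}.{domain}"
    return "https://www.google.com/search?" + urlencode({"q": f"site:{host}"})


# Subdomains taken from the list above.
subdomains = ["cert", "validator", "com", "javawww", "validate", "w3", "www.jigsaw"]

for name in subdomains:
    print(site_search_url(name))
```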

I don’t know what to say. It seems that it would be almost impossible to get high ranking results for any of these domains, but as demonstrated above, at least a few of them do show up — especially if you block the primary domain from your personalized results.

Again, I’m not an SEO expert, but it seems that this type of thing should penalize a site.

That’s interesting, and certainly seems a bit black hat to me.

It’s hard to believe with such a prominent site that Google doesn’t know this is going on.

Wayne, there are many videos about Google in which Google developers explain the search algorithm.

From what I have researched about Google’s search algorithm, they show personalized results based on the activity performed on one’s computer. They say this lets them know better what a person really wants to see in search.

As for clicking the back button, Google sees that as a sign that you didn’t like the webpage and didn’t get valid information from it. That’s why Google blocks the link from showing in future searches related to that keyword.

I personally think that we now depend on Google to decide what we see and what we don’t. That is not right, because this way we will only see perfect results and will not see the failures that actually help in achieving success.

Not evil SEO on their part, just a rather odd server configuration. They’re just using a wildcard subdomain – http://thisistheworstsiteontheinternet.w3schools.com/ also works.

So there’s a possibility that it’s not actually them linking to it. People posting links to W3Schools could be accidentally writing “wwww.w3schools.com” instead of http://www.w3schools.com

Of course, it could still be them doing it. But my point was that it may not have been intentional.

I don’t think so. First, people generally don’t type URLs, they copy and paste them. Also, even if they did type the URL, how does that explain the “www1”, “www3”, “w”, or “ww” based search results?

Although, come to think of it, the “1” and “3” are awfully close to the “w” on the keyboard. But regardless, Google should still recognize that as duplicate content, or else the site owners should see that and set up some 301 redirects.

And now that I think about it, the fact that Google has not penalized them shows that it’s possible that the parallel pages actually have slightly different content. Would be worth looking into but might take some time.
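To make the 301 redirect idea concrete, here is a rough Python sketch of the decision a server could make for each request: if the requested host is not the canonical one, answer with a permanent redirect to the same path on the canonical host. `CANONICAL_HOST`, `redirect_target`, and the `example.com` domain are hypothetical names for illustration; nothing here reflects how W3schools' servers are actually set up.

```python
from typing import Optional

# Hypothetical canonical host, used for illustration only.
CANONICAL_HOST = "www.example.com"


def redirect_target(host_header: str, path: str) -> Optional[str]:
    """Return the URL to 301-redirect to, or None if the host is already canonical."""
    # Drop an optional port, a trailing dot, and normalise the case.
    hostname = host_header.split(":", 1)[0].rstrip(".").lower()
    if hostname == CANONICAL_HOST:
        return None
    return f"http://{CANONICAL_HOST}{path}"


# A stray subdomain gets pointed back at the canonical host.
print(redirect_target("wwww.example.com", "/dom/page.asp"))
```

A real setup would normally do this in the web server configuration rather than in application code, but the logic is the same: every non-canonical host, including the bare domain, maps to a single address.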

Whoa, you’re making some huge assumptions here. First of all, “First, people generally don’t type URLs, they copy and paste them” Really? Well, it depends on how long the url is, and yeah most of the times I c/p too, but it doesn’t mean people will never type domains.

Secondly, if they are already on the ww1 domain and they copypaste, it’ll end up as a result.

How then do they end up on that subdomain? There are several possible ways.

– They typed in the initial site wrongly, and stayed on that wrong subdomain for the rest of their browsing session.

– They copypasted a link (from a comment on a site that doesn’t parse urls to click them, or from an email) wrongly, grabbing part of another word (javawww?).

– Parts of the actual site link to the wrong subdomain. I work at a fairly large website (literally hundreds of thousands of pages) and we use a lot of separate domains for testing purposes, accessing servers, et cetera. We use ww1 through ww24 to contact individual servers, bypassing the load balancer. Sometimes these links trickle through into the live site, unfortunately.

– They found it in Google. As I said we have a lot of different subdomains for testing and other purposes. It’s a big SEO issue that I’m currently working on, but in the meantime Google indexes our site across hundreds (!!) of subdomains, and will sometimes seemingly randomly serve our site with one of the wrong subdomains.

And there’s probably even more ways that I hadn’t thought of.

Bottom line: my company is certainly not evil, just has a very big website, and these kinds of things happen, especially if a lot of the site is 10-year old legacy code and wasn’t built to accommodate search engines back in those days.

Right now it’s a great big hell figuring out all our TLDs (also dozens) and subdomains, redirecting or canonicalizing them to the right place while leaving the correct ones intact.

I’m not saying w3schools is not evil. I don’t know, I’d just rather not use the site for technical information because I do know they’re not very accurate. All I’m saying is don’t accuse them of something based on what to me seems more like poor server configuration and poor SEO insight.

In addition to my previous comment, look at their <head> tag.

No canonical link. Meta descriptions stuffed with keywords. Actual meta keywords. Huge blocks of embedded style.

Looks to me like they’re just not that into SEO, or still have a ’90s SEO mindset. Which I think supports my point of this being inadequacy rather than evil.

Then they should not have strong rankings in search results.

If nothing else, even if everything you’ve said is true, and I’m totally wrong about their motives/intentions, then their SERPs should still be very poor and this should really be a non-issue.

But this is very significant because Google is allowing duplicate content to be ranked #1 (I’m talking about content for their subdomains, not their primary domain) for some very basic web development related search terms.

Also, if you review many of their subdomains (which you can see in the update I added to the original post, and in this JSBin), you’ll see that these cannot possibly all be development-related subdomains that just “leaked” into the live site’s links.

For example:

http://www.google.com/#q=site:www.w3schools.comwww.w3schools.com

The subdomain in that search is “www.w3schools.comwww”. That’s not a valid subdomain used for dev purposes; that’s (in my opinion) an obvious attempt at manipulation of search results.

Very interesting indeed, but I’m not sure how they’re getting away with it. Google is known to penalize sites for publishing duplicate content in this way: http://support.google.com/webmasters/bin/answer.py?hl=en&answer=66359

And they’re definitely duplicating content:

http://www.w3schools.com/dom/dom_mozilla_vs_ie.asp

http://validator.w3schools.com/dom/dom_mozilla_vs_ie.asp

http://forum.w3schools.com/dom/dom_mozilla_vs_ie.asp

Where does that article state there is a penalty for duplicate content outside the standard disclaimer…

“unless it appears that the intent of the duplicate content is to be deceptive and manipulate search engine results”?

Google has a duplicate content filter to stop them showing all those sub-domains in the same search result (it’s not a penalty)…

“Google tries hard to index and show pages with distinct information. This filtering means, for instance, that if your site has a “regular” and “printer” version of each article, and neither of these is blocked with a noindex meta tag, we’ll choose one of them to list”

I think we see a flaw in the block system. After blocking one sub-domain the filter system allows the next one to show in search results. A far more complex problem for Google to solve. Should blocking a domain also block sub-domains with duplicate content?
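The difference between blocking one exact host and blocking a domain together with all of its subdomains can be sketched in a few lines of Python. This is only an illustration of the matching logic under discussion; `is_blocked` is a hypothetical helper and says nothing about how Google's block feature is implemented.

```python
def is_blocked(host: str, blocked: set) -> bool:
    """Return True if the host, or any parent domain of it, is in the blocked set."""
    labels = host.lower().rstrip(".").split(".")
    # Check "wwww.w3schools.com", then "w3schools.com", then "com".
    for i in range(len(labels)):
        if ".".join(labels[i:]) in blocked:
            return True
    return False


# Blocking only the exact "www" host lets the look-alike subdomain through...
print(is_blocked("wwww.w3schools.com", {"www.w3schools.com"}))
# ...while blocking the registered domain covers every subdomain.
print(is_blocked("wwww.w3schools.com", {"w3schools.com"}))
```

The catch with the second approach is that it treats every subdomain as belonging to the same owner, which is not true for hosts that hand out subdomains to many different users.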

I don’t think they know what they are doing. The site responds on everything, even http://whatever.w3schools.com/ – it looks like a server issue to me :-)

The question is just, why is Google indexing duplicate content (DC)?

I think that’s because w3schools is using google-sitemap-generator. Google-sitemap-generator works as an Apache module and records each URL being accessed. So if these subdomains are somehow reached on the server, they get recorded and sent to Google in the sitemap.

Must be a server configuration error.

I am about to start on an SEO project for a client, and their website is also available on all subdomains using one, two, three, and four preceding w’s, and on www1 etc. No SEO has been done on that client’s website, so it doesn’t look like it’s intentional.

The indexing of the subdomains probably happened by others linking to the unwanted subdomains.

I think it is a server configuration error.

Interesting blog, thanks

I think a more curious question here is when does duplicate content come into play if wildcard subdomains are getting indexed, and why wouldn’t w3schools get penalized for said duplicate content.

Intentional or not, it does feel as if they are getting away with something Google typically slaps wrists for.

I’m afraid that Google webmaster guidelines apply only to smaller sites.

The corner stone of SEO. Really. It is.

After all, Google is a *commercial* enterprise meaning that you can actually buy what they’re selling: rank. Being small, you have to battle it out following their rules. Rules that are put there to plug the holes the search engine has. That is, do their work so they can profit easier.

Hopefully, once the plugs are patched, SEO, guidelines for search engines and such can fade into memory and we can fully concentrate instead on the way we *personally desire* to relay the actual content and the way we are supposed to help those using assistive technologies to receive the actual content.

Right now, looking at SERPs I can’t help but wonder: “Yes, I can see the forest, but where are the bloody trees!!!”

blocking w3schools.com here http://www.google.com/reviews/t?hl=en seems to cover all subdomains

Yes, that’s correct. But you have to do it manually like that. If you just click the “block” link, it only blocks the chosen subdomain (the common “www” version) but not the rest.

Isn’t there Google staff in the W3 consortium? Helpin a brotha out maybe? Wouldn’t be the first time for Google.

w3schools has absolutely nothing to do with the W3C. See http://w3fools.com/ as linked in the original post.

—

I agree with the others that it seems to be a misconfiguration issue rather than actual foul play. What confuses me is why this isn’t being flagged as duplicate content when ‘lesser’ sites have been penalised for less…

Everyone just needs to block this site in their results. Hopefully after thousands of people have blocked the site, Google will take notice and start penalizing them in search results.

I just quickly wanted to point out an error..

“But _they’re_ site often contains _errors_”

How ironic. :) Thanks, fixed.

Some more irony:

http://www1.impressivewebs.com/web-development-search-results-manipulated/

;)

This shows that the “*” is not the trick. The trick is how to leverage that the way they do???

I’ve now fixed this problem on this site. It seems that when I moved over to Media Temple’s hosting, I didn’t pick up on the fact that wildcard subdomains resolve by default to the primary IP address.

Thanks. :)
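A quick way to test for this kind of wildcard setup is to ask DNS for a subdomain that nobody would ever have created on purpose and see whether it resolves. The Python sketch below does that with a random label. `looks_like_wildcard` is a hypothetical helper, and a positive result only suggests a wildcard DNS record; it does not show what the web server does with the request.

```python
import socket
import uuid
from typing import Callable


def looks_like_wildcard(
    domain: str, resolver: Callable[[str], str] = socket.gethostbyname
) -> bool:
    """Return True if a random, almost certainly nonexistent subdomain resolves."""
    random_label = uuid.uuid4().hex  # 32 hex characters, a valid DNS label
    try:
        resolver(f"{random_label}.{domain}")
        return True
    except OSError:  # socket.gaierror is a subclass of OSError
        return False
```

Calling `looks_like_wildcard("example.com")` performs a real DNS lookup. The `resolver` parameter exists so the check can be exercised without network access.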

No you’re clearly trying to manipulate things for SEO purposes.

No, clearly I fixed the problem, and clearly I would never have written an article like this if I had known that random subdomains work on my site too.

Also, if you haven’t seen the JSBin compilation, here it is:

http://jsbin.com/upamof/edit#html,live

Although I strongly suggest here that they’re doing something manipulative, I never said it was definite. I just don’t see any other logical explanation for this.

Here’s an article I found that should explain the www1 phenomenon, etc.: http://www.webopedia.com/TERM/W/WWW1.html/

Interesting and very sharp that you spotted this. Normally, the SEO trick to combine the link juice of multiple duplicate page URLs into a single address is as follows:

<link rel="canonical" href="URL of target page" />

This way you can have multiple page addresses being seen as a single page by search engines. However, it seems W3schools does not make use of this. For any new site, that would mean a severe penalty. My theory is that the domain’s age and number of inbound links have a stronger weight in this case.
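To check whether a given page declares a canonical URL at all, something like the following Python sketch could be used. It parses an HTML string with the standard library and returns the `href` of the first `<link rel="canonical">` it finds. `CanonicalFinder` and `find_canonical` are hypothetical names, and the sample markup is made up for illustration.

```python
from html.parser import HTMLParser
from typing import Optional


class CanonicalFinder(HTMLParser):
    """Remember the href of the first <link rel="canonical"> tag seen."""

    def __init__(self) -> None:
        super().__init__()
        self.canonical: Optional[str] = None

    def handle_starttag(self, tag, attrs):
        if tag != "link" or self.canonical is not None:
            return
        attributes = dict(attrs)
        # rel can hold several space-separated values.
        rel_values = (attributes.get("rel") or "").lower().split()
        if "canonical" in rel_values:
            self.canonical = attributes.get("href")


def find_canonical(html: str) -> Optional[str]:
    """Return the canonical URL declared in the HTML, or None if there is none."""
    finder = CanonicalFinder()
    finder.feed(html)
    return finder.canonical


sample = '<head><link rel="canonical" href="http://www.example.com/page.asp" /></head>'
print(find_canonical(sample))
```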

I have the Personal Blocklist by Google installed, clicked “Block w3schools.com”, and it’s gone for good!

No wwww.w3schools.com or www1.w3schools.com or anything.

Wow – as an SEO – turning coder, I have to say this is disturbing on a few levels.

First, can’t stand seeing them every time at the top of the SERP’s…..and now this?

Tisk Tisk – definitely not in our best interest.

Thanks for sharing.

This actually made me say ‘wow’ out loud! It’s really sneaky and why would they bother?! Clearly if anyone has blocked one of their sites they don’t want to visit their pages and so just be happy in the knowledge that the w3 will have spent time and money on this sneaky method, only to try and get people who don’t want to visit their site more annoyed!

Please stop associating “w3schools” with the w3c.

That is all.

Gosh, that’s troubling to say the least. And, no I don’t think you are over reacting.

Something tells me that Google/Matt Cutts will soon discover these practices and will punish them accordingly.

I get a lot of help from w3school.com for clarifying concepts.

There is some nice, helpful material there.

Are you on crack?

Look at the cache version for the duplicate pages. In most cases they have the same Cache URL. Google knows that they’re the same content and has associated them together.

No harm done, it’s just a server config error.

Oh and there is no such thing as a duplicate content penalty.

That page says:

Bold added for emphasis.

W3schools is a pretty old site with many informative pages and external links, so Google will tend to favor the domain for many HTML, and CSS related searches. It is likely considered an ‘authority site’, much like Wikipedia.

The multiple sub-domains are a bit odd on their part, but not really black hat. www is just a sub domain on a site and Google sees sub-domains as unique websites… this is why a new WordPress blog does not get ranked like WordPress itself, so multiple sub-domains means you won’t block all of them, but it also means that your content is not in one place, ranking one site.

I agree that the lack of rel canonicals would be a real problem for a new site, but again, this is an older site with many links and content that is very on subject.

I would be more concerned about the black hat possibilities of blocking competing sites from multiple IPs to see if that influences Google’s ranking of a site you don’t like. <– A comment, not a suggestion. Crappy and very black hat. Do not do, blocks should be organic.

Only because I know everyone will comment. Yes. I know Google does not literally see a sub-domain as a separate site. I am speaking about PR / domain authority.

I agree, subdomains in and of themselves are fine. The problem is the fact that these subdomains contain duplicate content. The exact same content is being given similar SEO rank, which is very bizarre. Most of us go out of our way to avoid duplicate content. But these guys get rewarded for it…? Strange.

True, it is very odd. The only thing I can think of is that this site has piles of external back-links and that outweighs the on-page messiness.

That oooorrrr… the original PR theory was based on specific sites being manually selected as authoritative. I wonder if this is proof that this occurs and some domains are simply considered ‘a VIP’ of sorts on specific topics by Google.

I’ve never heard of duplicate content on different sub domains. Oh my goodness… This is the funniest thing I’ve ever read.

It’s called mirrors, different servers, backups. These all get index’d by google.

Don’t blame w3school for this. It’s not their fault google decides to index all these subdomains that contain duplicate content.

You better have a look at dochub.io, it’s a website providing fast access to documentation taken from MDN.

Perhaps suggest to Google they should block on the tld rather than the sub domain.

My preferred alternative would be to remove w3schools altogether, but that won’t happen :)

That wouldn’t be very helpful for sites like Tumblr, Blogspot, et al.

Put down your torches, maybe they are just parallelizing downloads. http://code.google.com/speed/page-speed/docs/rtt.html#ParallelizeDownloads

No they are not. Maybe you should have taken 10 seconds to look at their source code?

I work with the websearch-team here at Google. I just wanted to drop a short note about this particular issue. While using wildcard subdomains is suboptimal, it is not something that we would generally penalize a website for. Our websearch algorithms are pretty good at recognizing these kinds of technical issues – and generally solve them automatically by only showing one of the URLs in the search results. The most common variation of this is when a website serves its content under both the www subdomain as well as under the naked domain (non-www). Doing that is not malicious and not something that we would generally take manual action on.

You can find out more about how to solve duplicate content issues like these in our help center at http://support.google.com/webmasters/bin/answer.py?answer=66359 and http://support.google.com/webmasters/bin/answer.py?answer=139066 . Solving these issues would make it easier for us to crawl and index a website (fewer duplicates = less unnecessary crawling and indexing). If this was your site, I’d recommend fixing those issues as you might fix other issues on your site :).

I’ve passed a note along about the “block on back” functionality with regards to blocking a whole domain. That wouldn’t always make sense (eg sites hosted on Tumblr, Blogger, or WordPress), so I don’t think it would make sense to always do that.

Hi John, nice to see you posting again. The problem I have with your explanation is that not every one of those subdomains would have the same link profile or the same authority for the given search, even with the relevancy being identical, thus they would not all rank #1 just because one variation of the subdomain is blocked. So, one ponders why it would actually occur. Assuming that another variation actually did have the ranking power needed to boost it to the #1 spot, then it would surely show as the ‘second’ listing in the unblocked SERP, as it would on any other site. There are so many oddities with this particular find that it boggles the mind in fact.

The fact that w3schools is the worst resource ever in the first place does not even matter in this regard.

Use the Personal Blocklist Extension for your browser and you won’t see a thing because you can block the whole domain.

Compliment appreciated. :)

I don’t know that much about SEO. From the knowledge I have, this is actually bad for SEO and Google punishes it, but on the other hand w3schools is always at the top of the searches. I guess that’s from being linked often.

I know the w3fools site and I understand some of the points. Still, I like w3schools and I think it’s the single best place FOR STRAIGHT TO THE POINT HTML learning: no complicated mile-long specs, no gutter, just short examples, and that’s what people need when they want to learn HTML.

I just block w3schools from my results using this chrome plugin:

https://chrome.google.com/webstore/detail/personal-blocklist-by-goo/nolijncfnkgaikbjbdaogikpmpbdcdef

Not overreacting. w3schools’s dominance is stealing minutes of my time getting better at html5/javascript dev. With Lua and Python I got used to the first google result being very good, so I constantly accidentally click w3schools links when looking for javascript knowledge.

Thank you for the wonderful information. It’s been very useful to me, and I am now totally aware of the w3schools stuff. Thank you for informing us.

There is no guarantee with the Google search engine. In a split second we may end up on some other page and not be able to see it in future. Personally, I think yes, search results are being manipulated according to visitors.

Thank you for the wonderful information Keep Up Your Work.

I’m pretty sure they are as every now and then an algorithm update comes around and messes with the rankings.